It's hard to keep up with studies of the energy impact of generative AI, so here are nine takeaways from the sources I have personally found most illuminating.

This list aims for a "just the facts" approach that sidesteps the dueling interpretations of AI champions and critics. I'm also using one set of measures rather than comparing apples to oranges, specifically:

- 💡watt-hour (running a 60-watt incandescent light bulb for 1 minute)

- 🥤liter (about a quart)

- ☔️cubic centimeter (a raindrop).
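The unit conventions above can be sketched in a few lines of Python. This is a minimal sketch, not a definitive tool. The 60-watt bulb rating is an assumption (it is the rating that makes one minute of light equal one watt-hour), and the function names are hypothetical.

```python
# Minimal sketch of the units used in this list.
# Assumption: the "incandescent light bulb" is a 60-watt bulb, which is what
# makes one minute of light equal one watt-hour.

BULB_WATTS = 60       # assumed bulb rating (not stated in the original text)
CC_PER_LITER = 1000   # 1 liter = 1,000 cubic centimeters


def bulb_minutes(watt_hours: float, bulb_watts: float = BULB_WATTS) -> float:
    """Minutes the assumed bulb could run on the given energy (Wh)."""
    # Wh * 60 min/h gives watt-minutes; dividing by the bulb's watts gives minutes.
    return watt_hours * 60 / bulb_watts


def cc_to_liters(cc: float) -> float:
    """Convert cubic centimeters ("raindrops") to liters."""
    return cc / CC_PER_LITER


print(bulb_minutes(1))     # one watt-hour is one bulb-minute
print(cc_to_liters(4000))  # 4,000 raindrops' worth of water in liters
```

Keeping the conversions as tiny functions makes it easy to swap in a different bulb rating if you prefer a different reference point.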

This list assesses only current energy and water usage, not growth scenarios or actual environmental impact. I'm neither a climate scientist nor an electrical engineer; these are only my rough estimates based on academic studies, industry reports, or back-of-the-envelope calculations. It's unclear how some future tradeoffs will play out, eg whether improved efficiencies will cancel out increased demand. This list also excludes numerous potential AI downsides apart from environmental risks, which you can find explained in the IMPACT RISK framework.

I welcome suggestions of research that updates or contradicts these findings. You can find a log of recent updates here.

9 takeaways from recent research

- 🙈1. Lack of transparency by AI companies means usage calculations at this point are only estimates.

- 🌍2. Water and energy impacts are extremely localized; eg the stress on Ireland's water and grid is much higher than Norway's due to the latter's hydropower and cool climate.

- 🔌3. Large models consume disproportionately more energy and water than smaller ones.

- 🏋️4. Training a model consumes more energy and water than any single inference (prompt), but because it is a one-time cost, it accounts for about 30% of the overall footprint (EPRI 2024).

- 🏛5. Policy changes by new administrations can result in more or less climate impact for the same energy consumption.

- 📊6. Data centers are currently 3% of global energy demand (EPRI 2024, Goldman Sachs 2024).

  - 🪙Crypto is responsible for somewhere around 25% of the energy used by data centers.

  - 📱Social media and data usage currently consume most of the rest.

  - 🤖AI is responsible for 15% of data center energy demand, ie 3% × 15% = 0.45% of global demand (EPRI 2024).

- 💻7. Prompting a local model on a laptop requires no water and uses less than 10% of the energy of prompting a model in a data center.

- 🚰8. Cooling a data center requires about 4 cubic centimeters of water per watt-hour regardless of task (World Resources Institute 2020, Lawrence 2024).

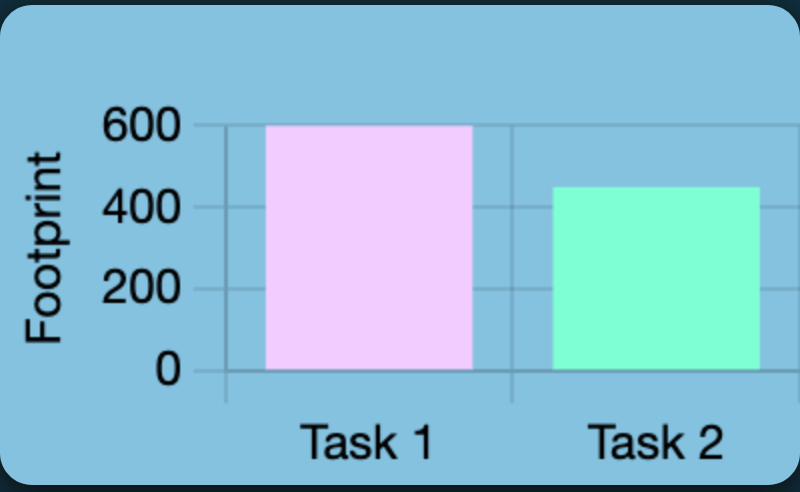

- ⚖️9. The impact of watching Netflix or joining a Zoom call can rival the largest impacts of AI generation. To compare the energy and water use of AI and non-AI tasks, visit our new app What Uses More.

⚠️ Please do not quote any of these figures without this caveat: "These are guesses based on incomplete and often contradictory sources."
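The arithmetic behind takeaways 6 and 8 can be sketched in Python. This is a back-of-the-envelope sketch only: the constants are the rough estimates quoted in this list, not measurements, and the function names and the 3 Wh example task are hypothetical illustrations.

```python
# Back-of-the-envelope arithmetic for takeaways 6 and 8.
# Constants are the rough estimates quoted in this list; they are guesses
# based on incomplete sources, not measurements.

DATA_CENTER_SHARE = 0.03  # data centers' share of global energy demand
AI_SHARE_OF_DC = 0.15     # AI's share of data center energy demand
WATER_CC_PER_WH = 4       # cooling water (cc) per watt-hour in a data center


def ai_share_of_global(dc_share: float = DATA_CENTER_SHARE,
                       ai_share: float = AI_SHARE_OF_DC) -> float:
    """AI's share of global demand: data centers' share times AI's slice of it."""
    return dc_share * ai_share


def cooling_water_cc(watt_hours: float,
                     cc_per_wh: float = WATER_CC_PER_WH) -> float:
    """Estimated cooling water (cc) for a data center task using `watt_hours`."""
    return watt_hours * cc_per_wh


print(f"{ai_share_of_global():.2%}")  # AI's estimated share of global demand
print(cooling_water_cc(3))            # hypothetical 3 Wh task
```

Because the water estimate is a flat rate per watt-hour, any task's cooling water scales directly with its energy use; a local model on a laptop (takeaway 7) would skip this term entirely.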

Sources

For an updated list of sources, see the Google Sheet associated with the What Uses More app.

Public domain cooling tower photograph via picryl.com (CC0).