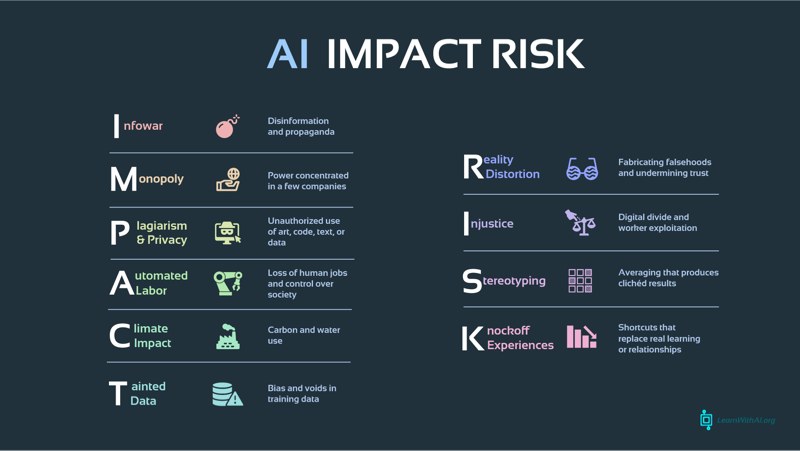

Along with the benefits of generative AI comes a varied array of harms, so many that it can be hard to remember them all. The IMPACT RISK acronym offers a mnemonic so you or your students can take them into account.

By keeping the IMPACT RISK framework in mind, we can navigate the AI revolution more thoughtfully, examining whether AI can be a force for progress rather than a Pandora's box of unintended consequences.

Infowar

AI-powered information warfare threatens to undermine democracy, from deepfakes of high-profile figures like President Biden to personalized attacks on local figures like high school principals.

The ease of generating customized code and text has made cyberattacks cheaper and faster to deploy, from custom malware to spearphishing emails.

Monopoly

A handful of Silicon Valley titans control the generative AI market, with Google vying for dominance against the OpenAI-Microsoft partnership and Meta leading when it comes to open-source models.

This concentration of power is even more evident in the AI chip market, where Nvidia's dominance leaves competitors scrambling for crumbs. The massive amount of computation required to compete in this market has led to brittle monopolies that can stifle entrepreneurship and innovation.

Plagiarism / Privacy

Artists, musicians, and authors are suing AI companies for training models on their copyrighted works, models that sometimes churn out knockoffs resembling the originals. On the privacy front, facial recognition software can trawl the internet for your face, turning privacy into a relic of the past.

Automated Labor

While few jobs may be completely replaced by robots, companies looking to cut costs can maintain productivity by relying on senior employees armed with AI. That means fewer openings for junior lawyers and copywriters and fewer freelance opportunities for designers and composers.

Meanwhile high-stakes decisions, whether on Wall Street or on the battlefield, are being outsourced to algorithms. Without sufficient human oversight, sudden shifts in job markets due to automation could destabilize economies faster than they can adapt.

Climate Impact

By 2027, the AI sector could consume as much energy per year as the Netherlands; protesters from Oregon to Uruguay are targeting data centers that suck up drinking water from local reservoirs. In their race to roll out ever-bigger generative AI models, Microsoft and Google have fallen well behind their carbon reduction goals, accelerating the threat of climate change. View AI's energy and water use compared to other technologies.

Tainted Data

The ghosts of human bias haunt our AI systems. Resume screeners discriminate against women and non-white applicants; facial recognition systems struggle with darker skin tones, potentially resulting in a self-driving car failing to identify a Black pedestrian.

Even tools meant to identify AI-generated essays can incorrectly flag non-native English speakers. These aren't just glitches; they're mirrors reflecting society's inequalities.

Reality Distortion

AI's outputs are often disconnected from reality, with potentially dangerous consequences. Bing's Sydney chatbot told a New York Times reporter to leave his wife for her, while ChatGPT falsely accused a law professor of sexual harassment.

These confident-sounding fabrications, or "hallucinations" as they are sometimes misleadingly called, don't have to be deliberate disinformation to cause harm. Unscrupulous users can now generate low-quality news articles—several per minute—that can be hard to distinguish from human-written posts. Persistent exposure to AI-generated distortions may erode trust in authentic sources of information over time or simply bury them in a mountain of digital debris.

Injustice

While Silicon Valley reaps the rewards of AI, the human cost is often hidden. Kenyans making $1 an hour are suffering emotional trauma while flagging detailed descriptions of murder, rape, and child sex abuse generated by chatbots. Meanwhile, in the Congo, children as young as seven are mining cobalt for our AI-powered devices.

In government, powerful AI companies might influence regulations in their favor, perpetuating inequality in the market. In education, the subscription costs of better large language models raise concerns about a new digital divide.

Stereotyping

Generative AI often devolves into clichés when answering questions. Ask an image generator for a "doctor," and you'll likely get a middle-aged white man in a lab coat. Request an "African village," and it might depict mud huts and sunsets.

These outputs are not simply the result of biased data. At a deeper level, they reflect the probabilistic nature of large language models, which return the "average" result from a prompt. Nevertheless, by surfacing stereotypes rather than outliers, AI might favor dominant cultures and languages, leading to the erosion of cultural diversity.

Knockoff Experiences

In classrooms across the world, teachers grapple with AI-generated homework as students outsource their learning to chatbots. In the music world, services like Suno allow anyone to "create" songs without touching an instrument, potentially flooding streaming platforms with AI-generated tunes. These shortcuts threaten to replace genuine skill development and creative expression with shallow imitations that undermine personal growth.

Beyond the individual, human relationships may devolve as AI generates thank-you notes, teen boys deepfake nude images of their female classmates, and adults turn to AI partners for companionship instead of their flesh-and-blood peers.

Explainer video

You can watch or repurpose a video explaining this acronym on YouTube or via a downloadable 220MB MPEG-4 or 23MB WebM file.

Infographic

You can download a version of this page as a portrait-oriented PDF or PNG, or a landscape-oriented slide you can incorporate into a presentation deck. You can find the original web page explaining the acronym at https://bit.ly/impactrisk.

Quiz

View a Google Form quiz on this acronym and request access to copy and edit your own version.

Ecological impact

It can be hard to grasp AI's ecological impact given the lack of data and polarized opinions, so we created a list of 9 takeaways along with the What Uses More calculator with easy-to-understand comparisons of the energy and water requirements of different tasks, from prompting ChatGPT to generating an AI video.

Discussion prompt about energy and water required for digital activities: slide with question, slide with answer.

Source media

Download a zipped folder containing individual acronym headings, illustrations, styled text, and an Adobe Illustrator source file to modify the framework to suit your own branding or needs.

Don't have access to the Adobe suite? Download an archive of vectorized icons, infographics, and a slide as Scalable Vector Graphics (SVG).

Course module

Download a lesson with these materials that can be imported into Canvas and other learning management systems via this .imscc file or via OER Commons or Canvas Commons. You can also copy the quiz text directly or download it as comma-separated-values from this Google Sheet.

Free to use or modify

This mnemonic and the infographics based on it have been released to the public domain for you to use freely or modify without permission, via a CC0 1.0 license.

Credits

The IMPACT RISK framework is a project of the Learning With AI initiative. Jon Ippolito drafted the mnemonic in conversation with GPT-4o and then revised it, wrote the descriptions, designed the infographics, and hand-coded this website. The image of a classroom with the words IMPACT RISK in the background was created with Stable Diffusion (model: Ideogram 1.0).

Although you don't need to cite work dedicated to the public domain (CC0), here's an optional academic citation: Jon Ippolito, "IMPACT RISK acronym for AI downsides," Learning With AI, https://bit.ly/impactrisk, posted 5 August 2024.

Icons on this page are by the author or have been modified from the following sources on The Noun Project: Bomb by Humam; monopoly market by icon trip; Privacy by Adrien Coquet; Robot Arm automation by Ali Nur Rohman; Factory by Gregor Cresnar; Database bad data by Marz Gallery; injustice by Adrien Coquet; Blocky arrow and bar chart Graph Down by Yon ten.